The Responsible Artificial Intelligence Lab (RAIL) introduced the FACETS framework, a quantitative approach to measuring responsible AI, at a virtual lecture on Tuesday, 24th January 2023. The lecture is part of RAIL’s objective to deepen the understanding of how responsible AI tools and frameworks can be developed and applied.

Prof. Jerry John Kponyo, the PI and Scientific Director of RAIL, stated that responsible AI means ensuring that AI solutions are delivered with integrity and equity, respecting individuals and always being mindful of their social impact. “There is a need to evaluate AI solutions according to established standards (ISO 26000) and frameworks (FACT, FATE, FACETS),” he added. In addressing the quantitative view of responsible AI, Prof. Kponyo mentioned the need for a measure of how responsible an AI solution should be and for an agreed, standardized framework that helps compare the performance of AI solutions globally. “A quantitative measure limits subjectivity in evaluating AI solutions,” he asserted.

He introduced the FACETS framework as an attempt by the AI research labs in the AI4D initiative to propose a metric for measuring responsible AI. He said that, whenever the framework is used, credit should be given to RAIL at KNUST, Ghana; CITACEL in Burkina Faso; and the University of Dodoma, Tanzania. He added that the governing document, “FACETS Responsible AI Framework,” is proposed to promote and objectively measure the responsible nature of the activities and innovations from AI Labs.

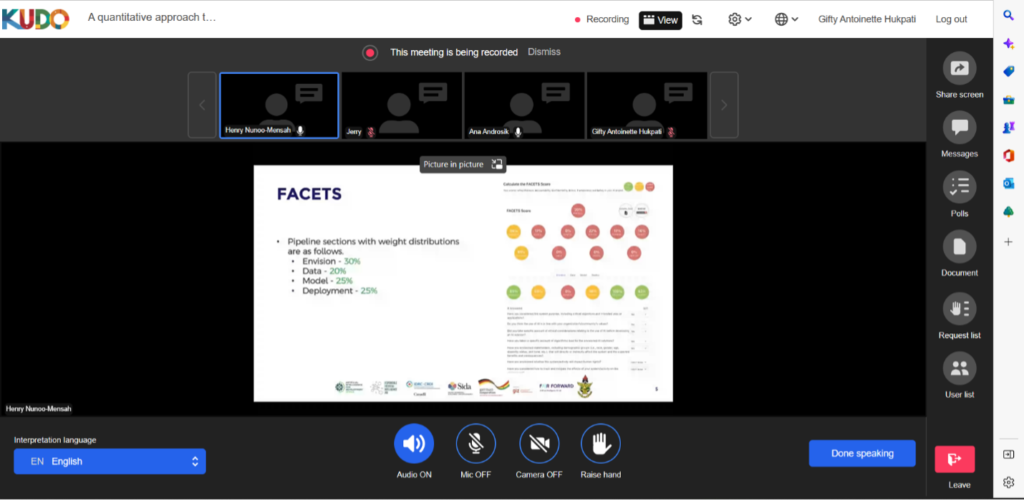

He indicated that FACETS measures performance across a pipeline that captures the whole AI innovation process, from envisioning to deployment. He revealed that F, A, C, E, T, and S stand for Fairness, Accountability, Confidentiality, Ethics, Transparency, and Safety, respectively, and that the overall weight distribution across the pipeline stages is as follows:

- Envision – 30%

- Data – 20%

- Model – 25%

- Deployment – 25%
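To illustrate how such weights could feed into a single figure, here is a minimal Python sketch. Only the four stage weights come from the lecture. The 0 to 100 per-stage scale, the weighted-sum aggregation, the function name `overall_score`, and the example scores are all assumptions made for illustration, not the scoring procedure defined in the FACETS governing document.

```python
# Stage weights in percent, as reported in the lecture.
STAGE_WEIGHTS = {"envision": 30, "data": 20, "model": 25, "deployment": 25}


def overall_score(stage_scores):
    """Combine per-stage scores into one weighted score.

    Assumption (not from the source): each stage is scored on a 0-100
    scale and the overall score is a simple weighted sum.
    """
    missing = set(STAGE_WEIGHTS) - set(stage_scores)
    if missing:
        raise ValueError(f"missing stage scores: {sorted(missing)}")
    for stage, score in stage_scores.items():
        if stage in STAGE_WEIGHTS and not 0 <= score <= 100:
            raise ValueError(f"score for {stage!r} must be between 0 and 100")
    # Weights are percentages, so divide the weighted total by 100.
    total = sum(STAGE_WEIGHTS[s] * stage_scores[s] for s in STAGE_WEIGHTS)
    return total / 100


# Hypothetical stage scores, invented purely for this example.
example_scores = {"envision": 80, "data": 60, "model": 70, "deployment": 90}
print(overall_score(example_scores))  # 76.0
```

Under these assumptions, a solution that scores well at deployment but poorly on data is still pulled down in proportion to the 20% data weight, which is the kind of comparison across solutions that a single weighted figure makes possible.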

He said RAIL seeks to collaborate with key research laboratories globally to drive the frontiers of the responsible use of AI. “These considerations provide an objective and fair assessment of AI innovations in our Research Labs,” he added.

Dr. Henry Nunoo-Mensah, the Programmes Coordinator of RAIL, took participants through an assessment of ChatGPT using the FACETS framework. He said the FACETS framework covers four critical stages of the development process: envision, data, model, and deployment. He presented a step-by-step procedure for applying the framework pipeline to measure the reliability of the ChatGPT platform.